Human-Centered AI in Healthcare: Fostering Transparency and Autonomy

A proposal that applies systems and cybernetics theory to integrate AI into healthcare more effectively, improving information transparency and empowering doctors. The project explores how AI can improve communication and decision-making between clinicians and patients, particularly in palliative care, and thereby improve the overall user experience. It is a speculative project intended to foster discussion of guidelines that keep AI use beneficial, ethical, and centered on human values.

Project Context and Background

Across industries worldwide, emerging and established technologies are being adopted as tools to enhance human work. Quantum computing is being explored in cryptography, where it could eventually break encryption schemes currently considered secure, and augmented reality is transforming retail by letting customers visualize products in a real-world context through their devices. As industries evolve with the rapid adoption of new technologies, the healthcare system is shifting from a labor-driven, technology-enabled model to a digital-driven, human-centered one. However, the rapid advancement of AI creates challenges for effective communication and decision-making in healthcare. This project aims to bridge that gap by proposing a human-centered AI framework that improves the experience of both healthcare professionals and patients.

In recent years, hospitals have increasingly introduced AI systems because of their ability to process large volumes of information and detect patterns in it.

For example, in 2017, Stanford University Hospital implemented an AI system that uses deep learning to predict each patient's likelihood of dying within 3 to 12 months, with the aim of identifying patients who could benefit from palliative care. Each morning, the system gives on-call physicians a list of such patients. The broader statistics show why this kind of support matters: although 80% of Americans want to spend their final days at home, only 20% were able to do so in 2017. By 2019, however, the home had become the predominant location for end-of-life care in the U.S., a shift that tools like this one are meant to support.

This AI system prompts doctors to initiate crucial end-of-life care discussions, potentially enhancing patient care. However, it also raises important concerns regarding the algorithm's influence within hospital settings. Delicate conversations about life, death, and well-being can become more complex with an algorithm's involvement, creating challenging gray areas for doctors and patients. This case study raises two key questions: How much should AI influence clinical decision-making in palliative care? And what are the differing goals between patients and clinicians in these discussions?

Further Research

In 2024, a survey of 1,081 physicians by the American Medical Association highlighted the “guarded enthusiasm” physicians feel toward AI applications in healthcare, reflecting both optimism and caution. While the overall attitude toward AI is positive, 41% of the surveyed physicians said “they were both equally excited and concerned about potential uses of AI in healthcare”. Dr. Jesse M. Ehrenfeld, co-chair of the Association for the Advancement of Medical Instrumentation’s AI committee, stressed that the design, development, and deployment of AI in healthcare must, above all else, be “equitable, responsible and transparent”.

Research and Analysis

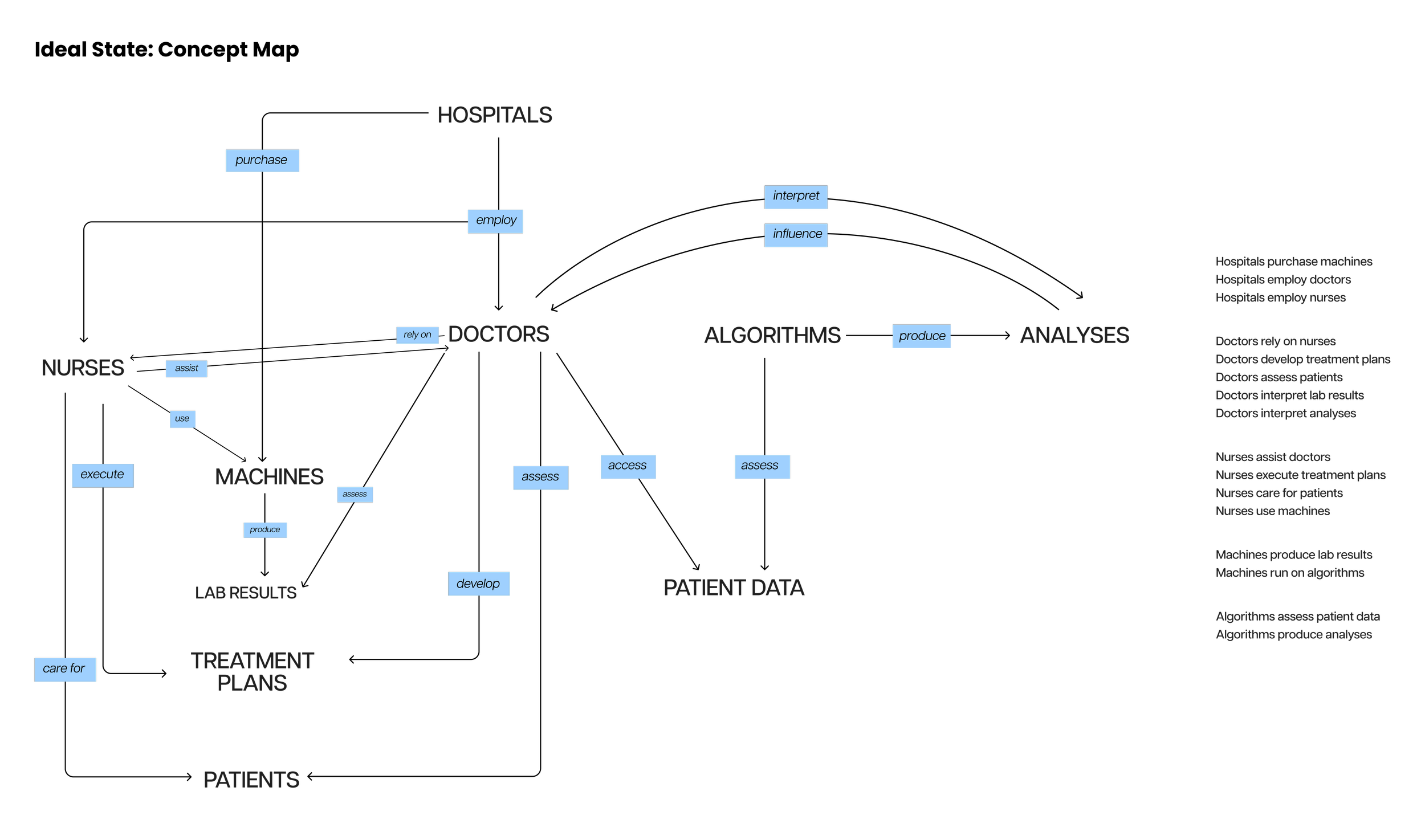

To explore these questions, I created a comprehensive goals-and-means diagram mapping the current landscape of AI integration in hospitals. It showed that while communication between doctors and patients thrives during palliative care evaluations, it is notably lacking at other stages of the healthcare journey.

Competencies

Systems Thinking, UX Research, Cybernetics Design, Ethical Design, Information Architecture, Strategic Communication

Project Duration

Spring 2023 (4 weeks)

“What we explicitly said to clinicians was: ‘If the algorithm would be the only reason you’re having a conversation with this patient, that’s not good enough of a reason to have the conversation—because the algorithm could be wrong.’”

Problem Statement

The current challenge in integrating AI into healthcare is the lack of effective communication and decision-making support for clinicians and patients, especially in palliative care. Although the results so far are promising, the rapid integration of AI into critical decision-making raises significant ethical and practical concerns. In particular, AI's influence on doctor-patient interactions and decisions could lead to unintended consequences, such as over-reliance on algorithmic predictions or an erosion of trust between patients and healthcare providers. This project addresses two user needs: clinicians need transparent, comprehensible AI insights to make informed decisions, and patients need clear communication and a full understanding of their care options.

Despite AI's crucial role and extensive access to patient information, significant gaps remain in how it interacts with the rest of the hospital ecosystem. The AI system operates somewhat in isolation, which calls for evaluating opportunities to integrate it more closely within the healthcare framework.

The concept map and goals-and-means diagram highlight a fundamental misalignment between the objectives of the algorithms and the intentions of the doctors who rely on them. The algorithms produce explicit results through ambiguous means, presenting lists of "high-risk" patients without clear explanations. This "black box" nature of AI makes it challenging for doctors to understand and trust its judgments, impacting their decision-making process.

Project Overview

Proposed Solution

In the ideal state of AI integration in hospitals, comprehensive dialogue would occur among algorithms, medical professionals, and patients. Currently, the algorithm exerts too much influence over doctors' decisions by steering them toward particular patients.

The proposed intervention involves a redesigned algorithm that avoids vague terms like "high-risk patients" or "likelihood of dying." Instead of generating ambiguous lists, the algorithm would provide detailed analyses of patient records, including relevant biomarkers, in a format easily understood by doctors. This approach aims to make the algorithm a more passive influencer in decision-making, enhancing doctors' autonomy. Additionally, to improve transparency, these analyses should be accessible to all doctors through shared databases.
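To make the proposed output format more concrete, the following is a minimal Python sketch under stated assumptions: the record structure, class names, and the example biomarker with its values are hypothetical placeholders chosen for illustration, not part of the original proposal and not clinical guidance. Each finding is shown with its value, unit, and reference range, and the summary deliberately carries no "high-risk" label or ranking, leaving interpretation to the clinician.

```python
from dataclasses import dataclass, field


@dataclass
class Finding:
    """One observation from a patient record, shown with its context (hypothetical structure)."""
    name: str
    value: float
    unit: str
    reference_low: float
    reference_high: float

    def status(self) -> str:
        # Describe the value relative to its reference range instead of scoring it.
        if self.value < self.reference_low:
            return "below range"
        if self.value > self.reference_high:
            return "above range"
        return "within range"


@dataclass
class PatientSummary:
    """A record summary for clinician review; intentionally contains no risk label or rank."""
    patient_id: str
    findings: list = field(default_factory=list)

    def render(self) -> str:
        lines = [f"Patient {self.patient_id}: record summary for clinician review"]
        for f in self.findings:
            lines.append(
                f"- {f.name}: {f.value} {f.unit} "
                f"(reference {f.reference_low}-{f.reference_high}, {f.status()})"
            )
        return "\n".join(lines)


if __name__ == "__main__":
    # Illustrative placeholder values only.
    summary = PatientSummary("demo-001", [Finding("Serum albumin", 2.9, "g/dL", 3.5, 5.0)])
    print(summary.render())
```

The design choice here mirrors the proposal: the algorithm describes what it found and how it compares to a reference, rather than issuing a verdict, so the decision to start a conversation stays with the doctor.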

Outcome and Impact

The goal of this proposed framework is to preserve the benefits of AI integration while mitigating its potential risks. It emphasizes transparency, accountability, and continuous dialogue among AI systems, healthcare professionals, and patients so that the use of AI remains aligned with ethical standards and human well-being.